Creating Production GKE Cluster with Terraform

In the Module 2 assignment we created the project $ORG-$PRODUCT-$ENV (here $student_name-notepad-dev) with a custom VPC using Terraform. We also followed naming-convention best practices and stored the Terraform state in a GCS backend with versioning.

In the Module 3 assignment we created a production GKE cluster with gcloud, which is a good first step towards automating your infrastructure. The next logical step is to use those gcloud commands as the basis for Terraform resources.

In Module 4 we will continue to use the infrastructure created in Module 2 and create production GKE clusters in it.

Objective:

- Create subnets, a Cloud Router and Cloud NAT with Terraform

- Create a regional, private GKE Standard cluster with Terraform

- Delete the default node pool and create a custom node pool with Terraform

- Create a GKE Autopilot cluster with Terraform

1 Creating Production GKE Cluster

Prepare Lab Environment

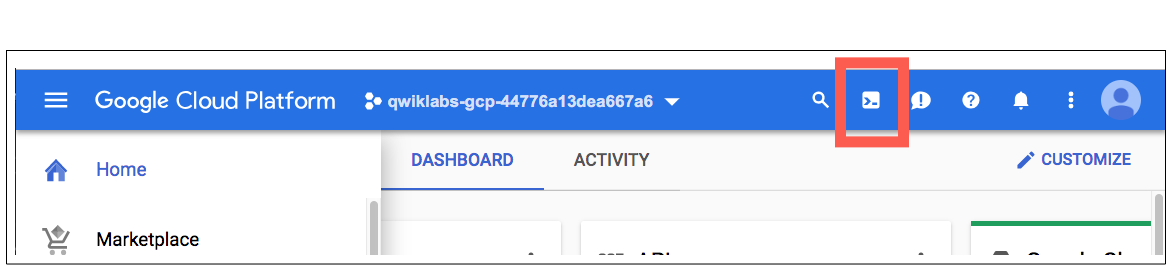

This lab can be executed in you GCP Cloud Environment using Google Cloud Shell.

Open the Google Cloud Shell by clicking on the icon on the top right of the screen:

Once opened, you can use it to run the instructions for this lab.

1.1 Locate Module 4 Assignment

Step 1 Locate your personal Google Cloud Source Repository:

export student_name=<write_your_name_here_and_remove_brackets>

Note

Replace the placeholder with your name. The rest of the lab refers to it as $student_name.

cd ~/$student_name-notepad

git pull # Pull the latest code from your repo

Step 2 Create the ycit020_module4 folder from your ycit020_module2 folder:

Important

Make sure you have finished all Module 2 tasks before doing this step.

cp -r ycit020_module2 ycit020_module4

Step 3 Commit the ycit020_module4 folder using the following Git commands:

cd ~/$student_name-notepad

git status

git add .

git commit -m "adding documentation for ycit020 module 4 assignment"

Step 4 Once you've committed the code to the local repository, push it to Cloud Source Repositories using the git push command:

git push origin master

1.2 Create user-managed subnets with Terraform

Use the google_compute_subnetwork resource to create two user-managed subnets with Terraform: one for the GKE Standard cluster and one for the GKE Autopilot cluster.

Go to the notepad-infrastructure folder, where we will continue building the GCP service layer with Terraform:

cd ~/$student_name-notepad/ycit020_module4/notepad-infrastructure

cat <<EOF >> subnets.tf

resource "google_compute_subnetwork" "gke_standard_subnet" {

name = format("gke-standard-%s-%s-%s-subnet", var.org, var.product, var.environment)

network = google_compute_network.vpc_network.self_link

region = var.gcp_region

project = var.gcp_project_id

ip_cidr_range = var.network_cidr

secondary_ip_range {

range_name = var.pods_cidr_name

ip_cidr_range = var.pods_cidr

}

secondary_ip_range {

range_name = var.services_cidr_name

ip_cidr_range = var.services_cidr

}

}

resource "google_compute_subnetwork" "gke_auto_subnet" {

name = format("gke-auto-%s-%s-%s-subnet", var.org, var.product, var.environment)

network = google_compute_network.vpc_network.self_link

region = var.gcp_region

project = var.gcp_project_id

ip_cidr_range = var.network_auto_cidr

secondary_ip_range {

range_name = var.pods_auto_cidr_name

ip_cidr_range = var.pods_auto_cidr

}

secondary_ip_range {

range_name = var.services_auto_cidr_name

ip_cidr_range = var.services_auto_cidr

}

}

EOF

Note

Notice the power of Terraform resource references: here we link each subnet to our VPC network through the self_link attribute of google_compute_network.vpc_network, the network created earlier. The reference also tells Terraform to create the network before the subnets.

Define variables:

cat <<EOF >> variables.tf

# variables used to create VPC subnets

variable "network_cidr" {

type = string

}

variable "pods_cidr" {

type = string

}

variable "pods_cidr_name" {

type = string

default = "gke-standard-pods"

}

variable "services_cidr" {

type = string

}

variable "services_cidr_name" {

type = string

default = "gke-standard-services"

}

variable "network_auto_cidr" {

type = string

}

variable "pods_auto_cidr" {

type = string

}

variable "pods_auto_cidr_name" {

type = string

default = "gke-auto-pods"

}

variable "services_auto_cidr" {

type = string

}

variable "services_auto_cidr_name" {

type = string

default = "gke-auto-services"

}

EOF

Define outputs:

cat <<EOF >> outputs.tf

output "subnet_selflink" {

value = google_compute_subnetwork.gke_standard_subnet.self_link

}

output "subnet_auto_selflink" {

value = google_compute_subnetwork.gke_auto_subnet.self_link

}

EOF

Task N1: Update the terraform.tfvars file with the following values from Table 1 below:

- Node range (both subnets): see the `subnet` column
- GKE Standard secondary ranges (`gke-standard` row):
  - Service range CIDR: see the `srv range` column
  - Pods range CIDR: see the `pod range` column
- GKE Autopilot secondary ranges (`gke-auto` row):
  - Service range CIDR: see the `srv range` column
  - Pods range CIDR: see the `pod range` column

Table 1

| Project | Subnet Name | subnet | pod range | srv range | kubectl api range |
|---|---|---|---|---|---|
| app 1 Dev | gke-standard | 10.130.0.0/24 | 10.0.0.0/16 | 10.100.0.0/23 | 172.16.0.0/28 |
| app 1 Dev | gke-auto | 10.131.0.0/24 | 10.1.0.0/16 | 10.100.2.0/23 | 172.16.0.16/28 |
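Before filling in terraform.tfvars, it can help to confirm that none of the planned ranges overlap: subnet primary and secondary ranges in one VPC must not overlap, and each GKE control plane needs its own non-overlapping /28. The following is a minimal Bash sketch that assumes IPv4 CIDRs only; ip_to_int, cidr_bounds, cidrs_overlap and check_no_overlap are hypothetical helpers, not part of the lab.

```shell
#!/usr/bin/env bash
# Sketch: check that the IPv4 CIDR blocks planned in Table 1 do not overlap.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
# 10# forces base 10 so octets such as "010" are not read as octal.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) + (10#$b << 16) + (10#$c << 8) + 10#$d ))
}

# Print the first and last address (as integers) of a CIDR block.
cidr_bounds() {
  local ip=${1%/*} len=${1#*/}
  local size=$(( 1 << (32 - len) ))
  local first=$(( $(ip_to_int "$ip") & ~(size - 1) ))
  echo "$first $(( first + size - 1 ))"
}

# Exit status 0 if the two CIDR blocks share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_bounds "$1")"
  read -r s2 e2 <<< "$(cidr_bounds "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Compare every pair of ranges and report each overlap found.
check_no_overlap() {
  local ranges=("$@") i j status=0
  for (( i = 0; i < ${#ranges[@]}; i++ )); do
    for (( j = i + 1; j < ${#ranges[@]}; j++ )); do
      if cidrs_overlap "${ranges[i]}" "${ranges[j]}"; then
        echo "OVERLAP: ${ranges[i]} and ${ranges[j]}"
        status=1
      fi
    done
  done
  return "$status"
}

# Table 1: subnet, pod, service and control-plane ranges of both clusters.
check_no_overlap \
  10.130.0.0/24 10.0.0.0/16 10.100.0.0/23 172.16.0.0/28 \
  10.131.0.0/24 10.1.0.0/16 10.100.2.0/23 172.16.0.16/28 \
  && echo "No overlapping ranges"
```

For the values in Table 1 this prints "No overlapping ranges"; if you change the design, any conflicting pair is listed instead.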

edit terraform.tfvars

Update the file with the values from the VPC subnet design in Table 1:

#gke-standard subnet vars

network_cidr = "TODO"

pods_cidr = "TODO"

services_cidr = "TODO"

#gke-auto subnet vars

network_auto_cidr = "TODO"

pods_auto_cidr = "TODO"

services_auto_cidr = "TODO"


Review TF Plan:

terraform plan -var-file terraform.tfvars

Create the subnets:

terraform apply -var-file terraform.tfvars

Review the created subnets:

export ORG=$student_name

export PRODUCT=notepad

export ENV=dev

gcloud compute networks subnets list

gcloud compute networks subnets describe gke-standard-$ORG-$PRODUCT-$ENV-subnet --region us-central1

gcloud compute networks subnets describe gke-auto-$ORG-$PRODUCT-$ENV-subnet --region us-central1
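The subnet names in these commands are the same strings Terraform builds with format() in subnets.tf, and format() uses printf-style %s verbs, so Bash's printf reproduces them exactly. A small sketch, assuming the ORG, PRODUCT and ENV variables exported above; subnet_name and describe_lab_subnets are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: rebuild the subnet names that subnets.tf creates with
#   format("gke-<type>-%s-%s-%s-subnet", var.org, var.product, var.environment)
# Usage: subnet_name standard|auto [org] [product] [env]
# Falls back to the exported ORG, PRODUCT and ENV when arguments are omitted.
subnet_name() {
  local type=$1
  local org=${2:-$ORG} product=${3:-$PRODUCT} env=${4:-$ENV}
  printf 'gke-%s-%s-%s-%s-subnet\n' "$type" "$org" "$product" "$env"
}

# Describe both lab subnets without retyping their names (requires gcloud).
describe_lab_subnets() {
  local type
  for type in standard auto; do
    gcloud compute networks subnets describe "$(subnet_name "$type")" --region us-central1
  done
}
```

For example, `subnet_name standard archy notepad dev` prints gke-standard-archy-notepad-dev-subnet, the name shown in the sample output below.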

Example of the Output:

enableFlowLogs: false
fingerprint: sWtjHJyqrM8=
gatewayAddress: 10.130.0.1
id: '251139681714314790'
ipCidrRange: 10.130.0.0/24
kind: compute#subnetwork
logConfig:
  enable: false
name: gke-standard-archy-notepad-dev-subnet
network: https://www.googleapis.com/compute/v1/projects/archy-notepad-dev-898/global/networks/vpc-archy-notepad-dev
privateIpGoogleAccess: false
privateIpv6GoogleAccess: DISABLE_GOOGLE_ACCESS
purpose: PRIVATE
region: https://www.googleapis.com/compute/v1/projects/archy-notepad-dev-898/regions/us-central1
secondaryIpRanges:
- ipCidrRange: 10.0.0.0/16
  rangeName: gke-standard-pods
- ipCidrRange: 10.100.0.0/23
  rangeName: gke-standard-services
selfLink: https://www.googleapis.com/compute/v1/projects/archy-notepad-dev-898/regions/us-central1/subnetworks/gke-standard-archy-notepad-dev-subnet
stackType: IPV4_ONLY

Also check in the Google Cloud console:

Networking -> VPC networks -> click the VPC network and open the `Subnets` tab

Task N2: Update subnets.tf so that both google_compute_subnetwork resources support the following features:

* Flow Logs configuration:
  * Aggregation interval: 15 min (reduces the cost of VPC Flow Logs; the default interval is 5 seconds)
  * Metadata: EXCLUDE_ALL_METADATA
* Private Google Access

Hint

Use google_compute_subnetwork resource to update subnet configurations with terraform.

edit subnets.tf

TODO

Review TF Plan:

terraform plan -var-file terraform.tfvars

Update the subnets:

terraform apply -var-file terraform.tfvars

Review the updated subnets:

gcloud compute networks subnets describe gke-standard-$ORG-$PRODUCT-$ENV-subnet --region us-central1

gcloud compute networks subnets describe gke-auto-$ORG-$PRODUCT-$ENV-subnet --region us-central1

Example of the Output:

creationTimestamp: '2022-10-03T19:56:09.579-07:00'
enableFlowLogs: true
fingerprint: Y8-BjoK1gK8=
gatewayAddress: 10.130.0.1
id: '251139681714314790'
ipCidrRange: 10.130.0.0/24
kind: compute#subnetwork
logConfig:
  aggregationInterval: INTERVAL_15_MIN
  enable: true
  filterExpr: 'true'
  flowSampling: 0.5
  metadata: EXCLUDE_ALL_METADATA
name: gke-standard-archy-notepad-dev-subnet
network: https://www.googleapis.com/compute/v1/projects/archy-notepad-dev-898/global/networks/vpc-archy-notepad-dev
privateIpGoogleAccess: true
privateIpv6GoogleAccess: DISABLE_GOOGLE_ACCESS
purpose: PRIVATE
region: https://www.googleapis.com/compute/v1/projects/archy-notepad-dev-898/regions/us-central1
secondaryIpRanges:
- ipCidrRange: 10.0.0.0/16
  rangeName: gke-standard-pods
- ipCidrRange: 10.100.0.0/23
  rangeName: gke-standard-services
selfLink: https://www.googleapis.com/compute/v1/projects/archy-notepad-dev-898/regions/us-central1/subnetworks/gke-standard-archy-notepad-dev-subnet
stackType: IPV4_ONLY
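To check the Task N2 result without reading the whole description, you can pipe the gcloud output through a small filter. This is a minimal sketch, assuming the default YAML output of `gcloud compute networks subnets describe`; check_subnet_task_n2 is a hypothetical helper that does plain substring matching, not real YAML parsing.

```shell
#!/usr/bin/env bash
# Sketch: verify the Task N2 subnet settings in a
# `gcloud compute networks subnets describe` YAML document read from stdin.
# Plain substring matching on the expected lines; not a YAML parser.
check_subnet_task_n2() {
  local desc expected status=0
  desc=$(cat)
  for expected in \
      'enableFlowLogs: true' \
      'aggregationInterval: INTERVAL_15_MIN' \
      'metadata: EXCLUDE_ALL_METADATA' \
      'privateIpGoogleAccess: true'; do
    if grep -qF -- "$expected" <<< "$desc"; then
      echo "OK       $expected"
    else
      echo "MISSING  $expected"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `gcloud compute networks subnets describe gke-standard-$ORG-$PRODUCT-$ENV-subnet --region us-central1 | check_subnet_task_n2`, then repeat for the gke-auto subnet.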

1.3 Create a Cloud Router

Create a Cloud Router for the custom-mode VPC network, in the same region as the instances that will use Cloud NAT. The Cloud Router is only used to place NAT information onto the VMs; it is not part of the actual NAT gateway.

Task N3: In router.tf, define a google_compute_router that Cloud NAT will use, so that the nodes of the private cluster can reach Docker Hub and external APIs. Use the following parameters:

- Network: the custom vpc_network created above with Terraform
- Project: same as the VPC
- Region: same as the VPC
- Router name: gke-net-router
- Local BGP Autonomous System Number (ASN): 64514

Use the google_compute_router resource reference documentation to create the Cloud Router resource with Terraform.

Hint

You can reference the VPC network created earlier through its resource attribute, for example google_compute_network.vpc_network.self_link.

edit router.tf

TODO


Save the populated file.

Review TF Plan:

terraform plan -var-file terraform.tfvars

Create Cloud Router:

terraform apply -var-file terraform.tfvars

Verify created Cloud Router:

CLI:

gcloud compute routers list

gcloud compute routers describe gke-net-router --region us-central1

Output:

bgp:
  advertiseMode: DEFAULT
  asn: 64514
  keepaliveInterval: 20
kind: compute#router
name: gke-net-router
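Cloud Router expects a private ASN that is not already used elsewhere in your network, which is why this lab uses 64514. RFC 6996 reserves 64512-65534 (16-bit) and 4200000000-4294967294 (32-bit) for private use. A small Bash sketch that checks a value against those ranges; is_private_asn is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: exit status 0 if $1 is a private-use BGP ASN as defined in RFC 6996.
is_private_asn() {
  local asn=$1 n
  [[ $asn =~ ^[0-9]{1,10}$ ]] || return 1   # digits only
  n=$(( 10#$asn ))                          # force base 10 (no octal surprises)
  if (( n >= 64512 && n <= 65534 )); then return 0; fi            # 16-bit private range
  if (( n >= 4200000000 && n <= 4294967294 )); then return 0; fi  # 32-bit private range
  return 1
}
```

Usage: `is_private_asn "$(gcloud compute routers describe gke-net-router --region us-central1 --format='value(bgp.asn)')" && echo "ASN OK"`.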

UI:

Networking -> Hybrid Connectivity -> Cloud Routers

Result

A Cloud Router has been created for the VPC network.

1.4 Create a Cloud NAT

Set up a simple Cloud NAT configuration using the google_compute_router_nat resource; it automatically allocates the external IP addresses needed to provide NAT services to the region.

When you use auto-allocation, Google Cloud reserves IP addresses in your project automatically.

cat <<EOF >> cloudnat.tf

resource "google_compute_router_nat" "gke_cloud_nat" {

project = var.gcp_project_id

name = "gke-cloud-nat"

router = google_compute_router.gke_net_router.name

region = var.gcp_region

nat_ip_allocate_option = "AUTO_ONLY"

source_subnetwork_ip_ranges_to_nat = "ALL_SUBNETWORKS_ALL_IP_RANGES"

}

EOF

Review TF Plan:

terraform plan -var-file terraform.tfvars

Create Cloud NAT:

terraform apply -var-file terraform.tfvars

Verify the created Cloud NAT gateway:

CLI:

# List the Cloud NAT gateways configured on gke-net-router

gcloud compute routers nats list --router gke-net-router --router-region us-central1

# Describe the gke-cloud-nat gateway

gcloud compute routers nats describe gke-cloud-nat --router gke-net-router --router-region us-central1

Output:

enableEndpointIndependentMapping: true
endpointTypes:
- ENDPOINT_TYPE_VM
icmpIdleTimeoutSec: 30
name: gke-cloud-nat
natIpAllocateOption: AUTO_ONLY
sourceSubnetworkIpRangesToNat: ALL_SUBNETWORKS_ALL_IP_RANGES
tcpEstablishedIdleTimeoutSec: 1200
tcpTransitoryIdleTimeoutSec: 30
udpIdleTimeoutSec: 30

UI:

Networking -> Network Services -> Cloud NAT

Result

A Cloud NAT gateway has been created on the router.

Task N4: Additionally, turn on Cloud NAT logging for ALL log types.

edit cloudnat.tf

TODO

Review TF Plan:

terraform plan -var-file terraform.tfvars

Update the Cloud NAT configuration:

terraform apply -var-file terraform.tfvars

Output:

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Verify the updated logging configuration:

gcloud compute routers nats describe gke-cloud-nat --router gke-net-router --router-region us-central1

Result

Cloud NAT logging is now enabled. Cloud NAT logging records NAT connections and errors. When it is enabled, a log entry can be generated for each of the following scenarios:

- When a network connection using NAT is created.

- When a packet is dropped because no port was available for NAT.
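The log entries end up in Cloud Logging. As a sketch, the helper below builds a Logging query for this gateway, optionally narrowed to dropped packets. nat_log_filter is hypothetical, and the resource type nat_gateway plus the resource.labels.region, resource.labels.gateway_name and jsonPayload.allocation_status field names are assumptions based on the Cloud NAT logging documentation, so compare them with your own log entries before relying on them.

```shell
#!/usr/bin/env bash
# Sketch: build a Cloud Logging filter for Cloud NAT logs.
# Usage: nat_log_filter <gateway> <region> [dropped]
# ASSUMPTION: field names follow the Cloud NAT logging docs; verify them.
nat_log_filter() {
  local gateway=$1 region=$2 only_dropped=${3:-}
  local filter="resource.type=\"nat_gateway\" AND resource.labels.region=\"$region\" AND resource.labels.gateway_name=\"$gateway\""
  if [[ $only_dropped == dropped ]]; then
    # Keep only entries for packets dropped because no NAT port was available.
    filter+=" AND jsonPayload.allocation_status=\"DROPPED\""
  fi
  printf '%s\n' "$filter"
}
```

Usage: `gcloud logging read "$(nat_log_filter gke-cloud-nat us-central1)" --limit 10`.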

1.5 Create a Private GKE Cluster and delete default node pool

1.5.1 Enable GCP Beta Provider

To create a GKE cluster with Terraform we will use the google_container_cluster resource.

Some google_container_cluster arguments and add-ons (for example, the Istio add-on) are only available in the google-beta provider.

The google-beta provider is distinct from the google provider in that it supports GCP products and features that are in beta, while google does not. Fields and resources that are only present in google-beta will be marked as such in the shared provider documentation.

Configure and initialize the GCP beta provider, similar to how we configured the GCP provider in 1.3.3 Initialize Terraform, by updating the provider.tf and main.tf configuration files.

rm provider.tf

cat <<EOF >> provider.tf

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "~> 4.37.0"

}

google-beta = {

source = "hashicorp/google-beta"

version = "~> 4.37.0"

}

}

}

EOF

cat <<EOF >> main.tf

provider "google-beta" {

project = var.gcp_project_id

region = var.gcp_region

}

EOF

Initialize google-beta provider plugin:

terraform init

Success

Terraform has been successfully initialized!

1.5.2 Enable Kubernetes Engine API

The Kubernetes Engine API is used to build and manage container-based applications, powered by the open-source Kubernetes technology. It must be enabled before you create a GKE cluster.

Task N5: Enable container.googleapis.com in the services.tf file, similar to what we did in 2.2 Enable required GCP Services API. Set disable_on_destroy = false for this service, which helps prevent errors when the system is redeployed (immutable infrastructure).

edit services.tf

TODO


Review TF Plan:

terraform plan -var-file terraform.tfvars

Enable the Kubernetes Engine API:

terraform apply -var-file terraform.tfvars

1.5.3 Create a Private GKE Cluster and delete default node pool

Use the google_container_cluster Terraform resource to create a regional, private GKE cluster with the following characteristics:

Cluster Configuration:

- Cluster name: gke-standard-$ORG-$PRODUCT-$ENV
- GKE control plane replicated across three zones of the us-central1 region
- Private cluster with unrestricted access to the public endpoint:
  - Cluster nodes access: private-node GKE cluster with a public API endpoint
  - Cluster Kubernetes API access: unrestricted access to the public endpoint
- Cluster node communication: VPC-native
  - Secondary pod range name: gke-standard-pods
  - Secondary service range name: gke-standard-services
- GKE release channel: regular
- GKE master and node version: "1.22.12-gke.300"
- Terraform provider: google-beta
- Timeouts to finish creating the cluster and deleting the default node pool: 30m
- Features:
  - Enable Cilium-based networking (Dataplane V2): enable-dataplane-v2
  - Configure the Workload Identity pool: PROJECT_ID.svc.id.goog
  - Enable the HTTP load-balancing add-on: http_load_balancing

The google_container_node_pool Terraform resource creates a custom GKE node pool.

Custom Node Pool Configuration:

- Machine (GCE VM) type: e2-micro
- Node count: 1 per zone
- Node image: Container-Optimized OS
- Boot disk size: 100 GB

Note

Why delete the default node pool? A default node pool created together with the cluster is not managed as a separate Terraform resource, which makes the cluster hard to manage with Terraform. GKE architecture best practices recommend deleting the default node pool, creating a custom one instead, and managing node pools explicitly.

Note

Why define timeouts for the GKE resource? Creating a GKE cluster normally takes a few minutes. In our case, however, Terraform creates the cluster and GKE then cordons, drains and deletes the default node pool. This can take 10-20 minutes, and we want to make sure Terraform does not time out in the meantime.

Step 1: Define the GKE cluster resource:

edit gke.tf

resource "google_container_cluster" "primary_cluster" {

provider = google-beta

project = var.gcp_project_id

name = format("gke-standard-%s-%s-%s", var.org, var.product, var.environment)

min_master_version = var.kubernetes_version

network = google_compute_network.vpc_network.self_link

subnetwork = google_compute_subnetwork.gke_standard_subnet.self_link

location = var.gcp_region

logging_service = var.logging_service

monitoring_service = var.monitoring_service

remove_default_node_pool = true

initial_node_count = 1

private_cluster_config {

enable_private_nodes = var.enable_private_nodes

enable_private_endpoint = var.enable_private_endpoint

master_ipv4_cidr_block = var.master_ipv4_cidr_block

}

# Enable Dataplane V2

datapath_provider = "ADVANCED_DATAPATH"

release_channel {

channel = "REGULAR"

}

addons_config {

http_load_balancing {

disabled = var.disable_http_load_balancing

}

}

ip_allocation_policy {

cluster_secondary_range_name = var.pods_cidr_name

services_secondary_range_name = var.services_cidr_name

}

timeouts {

create = "30m"

update = "30m"

delete = "30m"

}

workload_identity_config {

workload_pool = "${var.gcp_project_id}.svc.id.goog"

}

}

Step 2: Next define GKE cluster specific variables:

cat <<EOF >> gke_variables.tf

# variables used to create GKE Cluster Control Plane

variable "kubernetes_version" {

default = ""

type = string

description = "The GKE version of Kubernetes"

}

variable "logging_service" {

description = "The logging service that the cluster should write logs to."

default = "logging.googleapis.com/kubernetes"

}

variable "monitoring_service" {

default = "monitoring.googleapis.com/kubernetes"

description = "The GCP monitoring service scope"

}

variable "disable_http_load_balancing" {

default = false

description = "Set to true to disable the HTTP load balancing add-on"

}

variable "pods_range_name" {

description = "The pre-defined IP Range the Cluster should use to provide IP addresses to pods"

default = ""

}

variable "services_range_name" {

description = "The pre-defined IP Range the Cluster should use to provide IP addresses to services"

default = ""

}

variable "enable_private_nodes" {

default = false

description = "Enable Private-IP Only GKE Nodes"

}

variable "enable_private_endpoint" {

default = false

description = "When true, the cluster's private endpoint is used as the cluster endpoint and access through the public endpoint is disabled."

}

variable "master_ipv4_cidr_block" {

description = "The ipv4 cidr block that the GKE masters use"

}

variable "release_channel" {

type = string

default = ""

description = "The release channel of this cluster"

}

EOF

Step 3: Define GKE cluster specific outputs:

edit outputs.tf

Add following outputs and save file:

output "id" {

value = google_container_cluster.primary_cluster.id

}

output "endpoint" {

value = google_container_cluster.primary_cluster.endpoint

}

output "master_version" {

value = google_container_cluster.primary_cluster.master_version

}

Task N6: Complete terraform.tfvars with the values required for the GKE cluster specified above:

edit terraform.tfvars

//gke specific

enable_private_nodes = "TODO"

master_ipv4_cidr_block = "TODO" # Using Table 1 `kubectl api range` for GKE Standard

kubernetes_version = "TODO" # From GKE Cluster requirements

release_channel = "TODO" # From GKE Cluster requirements

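GKE versions such as 1.22.12-gke.300 are only offered for a limited time, so it is worth checking that the value you put in kubernetes_version is still available in the Regular channel before running terraform apply. A sketch under stated assumptions: version_offered and regular_channel_versions are hypothetical helpers, and the code assumes the gcloud value() format prints list items separated by semicolons.

```shell
#!/usr/bin/env bash
# Sketch: exit status 0 if the version given as $1 appears in a list on stdin.
# Accepts one version per line or a ';'-separated list (gcloud value() output).
version_offered() {
  tr ';' '\n' | grep -qxF -- "$1"
}

# Query the versions offered in the REGULAR release channel (requires gcloud).
regular_channel_versions() {
  gcloud container get-server-config --region us-central1 \
    --flatten="channels" --filter="channels.channel=REGULAR" \
    --format="value(channels.validVersions)"
}
```

Usage: `regular_channel_versions | version_offered 1.22.12-gke.300 || echo "Version not offered, pick another one"`.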

In the next step, we are going to create a custom GKE node pool.

1.5.4 Create a GKE custom Node pool

Use the google_container_node_pool resource to create a custom GKE node pool with the following characteristics:

Custom Node Pool Configuration:

- Machine (GCE VM) type: e2-micro
- Node count: 1 per zone
- Node image: Container-Optimized OS
- Boot disk size: 100 GB

Step 1: Define the node pool resource:

cat <<EOF >> gke.tf

#Node Pool Resource

resource "google_container_node_pool" "custom-node_pool" {

name = "main-pool"

location = var.gcp_region

project = var.gcp_project_id

cluster = google_container_cluster.primary_cluster.name

node_count = var.gke_pool_node_count

version = var.kubernetes_version

node_config {

image_type = var.gke_pool_image_type

disk_size_gb = var.gke_pool_disk_size_gb

disk_type = var.gke_pool_disk_type

machine_type = var.gke_pool_machine_type

}

timeouts {

create = "10m"

delete = "10m"

}

lifecycle {

ignore_changes = [

node_count

]

}

}

EOF

Step 2: Next, define the node pool specific variables:

cat <<EOF >> gke_variables.tf

#Node Pool specific variables

variable "gke_pool_machine_type" {

type = string

}

variable "gke_pool_node_count" {

type = number

}

variable "gke_pool_disk_type" {

type = string

default = "pd-standard"

}

variable "gke_pool_disk_size_gb" {

type = string

}

variable "gke_pool_image_type" {

type = string

}

EOF

Task N7: Complete terraform.tfvars with the node pool values specified above:

edit terraform.tfvars

//pool specific

gke_pool_node_count = "TODO"

gke_pool_image_type = "TODO"

gke_pool_disk_size_gb = "TODO"

gke_pool_machine_type = "TODO"

Step 3: Review TF Plan:

terraform plan -var-file terraform.tfvars

Step 4: Create GKE Cluster and Node Pool:

terraform apply -var-file terraform.tfvars

Output:

google_container_cluster.primary_cluster: Creating...

...

google_container_cluster.primary_cluster: Creation complete after 20m9s

google_container_node_pool.custom-node_pool: Creating...

google_container_node_pool.custom-node_pool: Creation complete after 2m10s

Note

Creating the GKE control plane and deleting the default node pool can take 12 minutes or more (about 20 minutes in the output above); creating the custom node pool takes a few more minutes.

Verify Cluster has been created:

export ORG=$student_name

export PRODUCT=notepad

export ENV=dev

gcloud container clusters list

gcloud container clusters describe gke-standard-$ORG-$PRODUCT-$ENV --region us-central1
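Because node_count in a regional google_container_node_pool is applied per zone, and GKE spreads a regional cluster's nodes across three zones by default, 1 node per zone should give three nodes. A minimal sketch that fetches credentials and counts Ready nodes; count_ready_nodes and verify_standard_nodes are hypothetical helpers, and the count parses the STATUS column of `kubectl get nodes --no-headers`.

```shell
#!/usr/bin/env bash
# Sketch: count nodes whose STATUS column is exactly "Ready" in the output
# of `kubectl get nodes --no-headers` read from stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Fetch credentials for the Standard cluster, then compare the Ready node
# count with 1 node per zone x 3 zones (requires gcloud and kubectl).
verify_standard_nodes() {
  local cluster="gke-standard-${ORG}-${PRODUCT}-${ENV}" ready
  gcloud container clusters get-credentials "$cluster" --region us-central1
  ready=$(kubectl get nodes --no-headers | count_ready_nodes)
  echo "Ready nodes: $ready (expected 3)"
  [[ $ready -eq 3 ]]
}
```

The public endpoint is reachable from Cloud Shell in this setup, so `verify_standard_nodes` can run there directly.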

1.5.5 Update GKE Node Pool Auto-Upgrade and Auto-Repair Settings

Note

GKE control plane (master) nodes are managed by Google and are upgraded automatically. Users can only specify a maintenance window if they prefer upgrades to happen at a particular time (e.g. outside busy hours). Users can, however, control the node pool upgrade lifecycle: they can upgrade node pools themselves or use auto-upgrade.

Task N8: Using the google_container_node_pool resource, update the node pool to turn off the auto-upgrade and auto-repair features, which are enabled by default for clusters on a release channel.

edit gke.tf

TODO

Solution: According to the GCP documentation, auto-upgrade can only be disabled by unsubscribing the cluster from its release channel.

In gke.tf, change the cluster's release channel from REGULAR to UNSPECIFIED, and add a management block that turns off auto_repair and auto_upgrade to the google_container_node_pool resource:

edit gke.tf

# In google_container_cluster.primary_cluster:

release_channel {

channel = "UNSPECIFIED"

}

# In google_container_node_pool.custom-node_pool:

management {

auto_repair = false

auto_upgrade = false

}

Step 3: Review TF Plan:

terraform plan -var-file terraform.tfvars

No errors.

Step 4: Update GKE Cluster Node Pool configuration:

terraform apply -var-file terraform.tfvars

Summary

Congrats! You've now learned how to deploy production grade GKE clusters.

1.6 Create a GKE Autopilot Cluster with Terraform

Step 1: Define GKE Autopilot resource first:

cat <<EOF >> gke_auto.tf

resource "google_container_cluster" "auto_cluster" {

provider = google-beta

project = var.gcp_project_id

name = format("gke-auto-%s-%s-%s", var.org, var.product, var.environment)

min_master_version = var.kubernetes_version

network = google_compute_network.vpc_network.self_link

subnetwork = google_compute_subnetwork.gke_auto_subnet.self_link

location = var.gcp_region

logging_service = var.logging_service

monitoring_service = var.monitoring_service

# Enable Autopilot for this cluster

enable_autopilot = true

# Private Autopilot GKE cluster

private_cluster_config {

enable_private_nodes = var.enable_private_nodes

enable_private_endpoint = var.enable_private_endpoint

master_ipv4_cidr_block = var.auto_master_ipv4_cidr_block

}

# Configuration options for the Release channel feature, which provide more control over automatic upgrades of your GKE clusters.

release_channel {

channel = "REGULAR"

}

# Configuration of cluster IP allocation for VPC-native clusters

ip_allocation_policy {

cluster_secondary_range_name = var.pods_auto_cidr_name

services_secondary_range_name = var.services_auto_cidr_name

}

timeouts {

create = "20m"

update = "20m"

delete = "20m"

}

}

EOF

Step 2: Next define GKE cluster specific variables:

cat <<EOF >> gke_variables.tf

# variables used to create GKE AutoPilot Cluster Control Plane

variable "auto_master_ipv4_cidr_block" {

description = "The ipv4 cidr block that the GKE masters use"

}

EOF

Step 3: Define GKE cluster specific outputs:

edit outputs.tf

Add following outputs and save file:

output "autopilot_id" {

value = google_container_cluster.auto_cluster.id

}

output "autopilot_endpoint" {

value = google_container_cluster.auto_cluster.endpoint

}

output "autopilot_master_version" {

value = google_container_cluster.auto_cluster.master_version

}

Update tfvars

edit terraform.tfvars

//gke autopilot specific

auto_master_ipv4_cidr_block = "172.16.0.16/28"

Step 4: Review TF Plan:

terraform plan -var-file terraform.tfvars

Step 5: Create GKE Autopilot Cluster:

terraform apply -var-file terraform.tfvars

Output:

google_container_cluster.auto_cluster: Still creating..

...

google_container_cluster.auto_cluster: Creation complete after 7m58s

Verify Cluster has been created:

export ORG=$student_name

export PRODUCT=notepad

export ENV=dev

gcloud container clusters list

gcloud container clusters describe gke-auto-$ORG-$PRODUCT-$ENV --region us-central1

Task N9: Using the google_container_cluster resource, configure a maintenance window that recurs on weekdays from 9:00 to 17:00 UTC-4 and skips weekends, starting from October 2nd. The equivalent gcloud flags are:

--maintenance-window-start 2022-10-02T09:00:00-04:00 \

--maintenance-window-end 2022-10-02T17:00:00-04:00 \

--maintenance-window-recurrence 'FREQ=WEEKLY;BYDAY=MO,TU,WE,TH,FR'
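The -04:00 offset in these flags means that 09:00-17:00 local time is 13:00-21:00 UTC. If you prefer to write UTC timestamps in your Terraform configuration, GNU date (available in Cloud Shell) can convert them. A small sketch; to_utc is a hypothetical helper and relies on GNU date parsing ISO 8601 timestamps with an offset.

```shell
#!/usr/bin/env bash
# Sketch: convert an ISO 8601 timestamp with a UTC offset to UTC ("Z" form).
# Relies on GNU date's -d parsing, as available in Cloud Shell.
to_utc() {
  date -u -d "$1" '+%Y-%m-%dT%H:%M:%SZ'
}

to_utc 2022-10-02T09:00:00-04:00   # prints 2022-10-02T13:00:00Z
to_utc 2022-10-02T17:00:00-04:00   # prints 2022-10-02T21:00:00Z
```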

edit gke_auto.tf

TODO

Step 6: Review TF Plan:

terraform plan -var-file terraform.tfvars

Step 7: Update the GKE Autopilot cluster:

terraform apply -var-file terraform.tfvars

google_container_cluster.auto_cluster: Modifying... [id=projects/archy-notepad-dev-898/locations/us-central1/clusters/gke-auto-archy-notepad-dev]

google_container_cluster.auto_cluster: Modifications complete after 2s [id=projects/archy-notepad-dev-898/locations/us-central1/clusters/gke-auto-archy-notepad-dev]

Open the GKE page in the Cloud console and verify that the Autopilot cluster has a maintenance window configured.

Success

You can now create GKE Autopilot clusters with Terraform.

1.7 (Optional) Repeatable Infrastructure

When you practice IaC, it is important to ensure that you can create and destroy resources consistently. This is especially important for CI/CD testing.

Step 1: Destroy all resources:

terraform destroy -var-file terraform.tfvars

No errors.

Step 2: Recreate all resources:

terraform plan -var-file terraform.tfvars

terraform apply -var-file terraform.tfvars

1.8 Create Documentation for terraform code

Documentation for your terraform code is an important part of IaC. Make sure all your variables have a good description!
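Since terraform-docs builds its tables from the description arguments, a quick way to find gaps is to list the variable blocks that have none (in this lab, network_cidr in variables.tf is one of them). A rough sketch, assuming the one-argument-per-line layout used in this lab; it counts braces line by line and is not a full HCL parser, so braces inside strings or heredocs would confuse it. vars_without_description is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: print the names of Terraform variable blocks that lack a
# description argument. Line-based brace counting, not a full HCL parser.
vars_without_description() {
  awk '
    # A top-level variable "name" line starts a new block.
    depth == 0 && /^[[:space:]]*variable[[:space:]]+"/ {
      name = $2; gsub(/"/, "", name); has_desc = 0; in_var = 1
    }
    # A description argument directly inside the variable block.
    in_var && depth == 1 && /^[[:space:]]*description[[:space:]]*=/ { has_desc = 1 }
    # Track brace depth; report the variable when its block closes.
    {
      opens = gsub(/[{]/, "{"); closes = gsub(/[}]/, "}")
      depth += opens - closes
      if (in_var && depth == 0 && (opens || closes)) {
        if (!has_desc) print name
        in_var = 0
      }
    }
  ' "$@"
}
```

Usage: run `vars_without_description *.tf` inside notepad-infrastructure and add a description to every variable it lists.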

There are community tools that make documenting Terraform resources and requirements easier. It's also good practice to include a usage example snippet.

terraform-docs is one such tool: it generates documentation from the description arguments of your input variables and output values, and from your required_providers configuration.

Task N10: Create Terraform Documentation for your infrastructure.

TODO

Step 1 Install the terraform-docs CLI in your Google Cloud Shell environment:

curl -sSLo ./terraform-docs.tar.gz https://terraform-docs.io/dl/v0.16.0/terraform-docs-v0.16.0-$(uname)-amd64.tar.gz

tar -xzf terraform-docs.tar.gz

chmod +x terraform-docs

sudo mv terraform-docs /usr/local/bin/

terraform-docs

Generating terraform documentation with Terraform Docs:

cd ~/$student_name-notepad/ycit020_module4/foundation-infrastructure

terraform-docs markdown . > README.md

cd ~/$student_name-notepad/ycit020_module4/notepad-infrastructure

terraform-docs markdown . > README.md

Verify created documentation:

edit README.md

1.9 Commit Readme doc to repository and share it with Instructor/Teacher

Step 1 Commit ycit020_module4 folder using the following Git commands:

cd ~/$student_name-notepad

git add .

git commit -m "TF manifests for Module 4 Assignment"

Step 2 Push commit to the Cloud Source Repositories:

git push origin master

Result

Your instructor will be able to review your code and grade it.

1.10 Cleanup

We will only clean up the GCP service layer (notepad-infrastructure), because we will reuse the GCP project in future modules.

cd ~/$student_name-notepad/ycit020_module4/notepad-infrastructure

terraform destroy -var-file terraform.tfvars

2. Workaround for Project Quota issue

If you see the following error while creating the project in the foundation layer:

Error: Error setting billing account "010BE6-CA1129-195D77" for project "projects/ayrat-notepad-dev-244": googleapi: Error 400: Precondition check failed., failedPrecondition

This happens because our billing account has a quota of 5 linked projects.

To solve this issue, list the projects linked to the billing account ($ACCOUNT_ID is your billing account ID) and find the unused ones:

gcloud beta billing projects list --billing-account $ACCOUNT_ID

Then unlink the unused projects so that fewer than 5 projects are linked to the account:

gcloud beta billing projects unlink $PROJECT_ID
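To see how close you are to the limit, you can count the linked projects. A small sketch, assuming one project ID per line on stdin and the quota of 5 mentioned above; check_billing_quota is a hypothetical helper, and it succeeds only while there is room to link another project.

```shell
#!/usr/bin/env bash
# Sketch: count project IDs read from stdin (one per line) and compare the
# count with the billing account's project quota (default 5).
check_billing_quota() {
  local quota=${1:-5} count
  count=$(grep -c . || true)   # grep -c prints 0 (and exits 1) on empty input
  echo "Linked projects: $count / $quota"
  (( count < quota ))
}
```

Usage: `gcloud beta billing projects list --billing-account $ACCOUNT_ID --format="value(projectId)" | check_billing_quota 5`.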