Deploy Applications on Kubernetes

Objective:

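- Review the process of creating the following Kubernetes features:
    - Liveness Probes
    - Readiness Probes
    - Secrets
    - ConfigMaps
    - Resource Requests
    - Resource Limits
    - Horizontal Pod Autoscaler (HPA)
    - Vertical Pod Autoscaler (VPA)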

Prepare the Cloud Source Repository Environment with the Module 9 Assignment

This lab can be executed in your GCP environment using Google Cloud Shell.

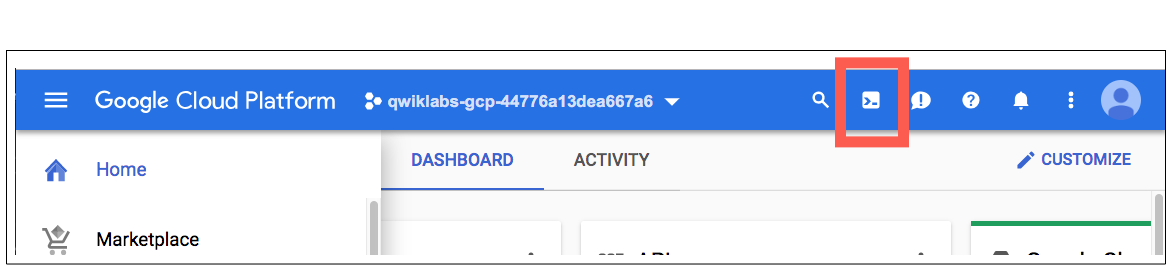

Open the Google Cloud Shell by clicking on the icon on the top right of the screen:

Once opened, you can use it to run the instructions for this lab.

Cloud Source Repositories: Quick Start

Step 1 Go to the directory where the Kubernetes YAML manifests will be stored.

cd ~/ycit019_2022/

git pull # Pull latest Mod9_assignment

If you don't have this folder, clone it as follows:

cd ~

git clone https://github.com/Cloud-Architects-Program/ycit019_2022

cd ~/ycit019_2022/Mod9_assignment/

ls

Step 2 Go into the local repository you've created:

export student_name=<write_your_name_here_and_remove_brackets>

Important

Replace the placeholder above with your student name.

cd ~/$student_name-notepad

Step 3 Copy the Mod9_assignment folder to your repository:

git pull # Pull the latest code from your repo

cp -r ~/ycit019_2022/Mod9_assignment/ .

Step 4 Commit the Mod9_assignment folder using the following Git commands:

git status

git add .

git commit -m "adding Mod9_assignment with Kubernetes YAML manifests"

Step 5 Once you've committed code to the local repository, add its contents to Cloud Source Repositories using the git push command:

git push origin master

Step 6 Review Cloud Source Repositories

Use the Google Cloud Source Repositories code browser to view repository files.

You can filter your view to focus on a specific branch, tag, or commit.

Browse the Mod9_assignment files you pushed to the repository by opening the Navigation menu and selecting Source Repositories:

Click Menu > Source Repositories > Source Code.

Result

The console shows the files in the master branch at the most recent commit.

1.1 Create GKE Cluster with Cluster and Vertical Autoscaling Support

Step 1 Enable the Google Kubernetes Engine API.

gcloud services enable container.googleapis.com

Step 2 From the cloud shell, run the following command to create a cluster with two nodes:

gcloud container clusters create k8s-features \
  --zone us-central1-c \
  --enable-vertical-pod-autoscaling \
  --num-nodes 2 \
  --enable-autoscaling --min-nodes 1 --max-nodes 3

Output:

NAME          LOCATION       MASTER_VERSION   MASTER_IP      MACHINE_TYPE  NODE_VERSION     NUM_NODES  STATUS
k8s-features  us-central1-c  1.19.9-gke.1400  34.121.222.83  e2-medium     1.19.9-gke.1400  2          RUNNING

Step 3 Authenticate to the cluster.

gcloud container clusters get-credentials k8s-features --zone us-central1-c

2.1 Externalize Web Application Configuration

Let’s make some minor modifications to the web application to externalize its configuration, and make it easier to manage and update at deployment time.

Step 1: Move the config file outside the compiled application

First, let’s move the web application’s configuration into a folder outside the main compilation path:

cd ~

mkdir ~/$student_name-notepad/Mod9_assignment/gowebapp/config

cp ~/$student_name-notepad/Mod9_assignment/gowebapp/code/config/config.json \
  ~/$student_name-notepad/Mod9_assignment/gowebapp/config

Remove the config folder located in the code directory:

rm -rf ~/$student_name-notepad/Mod9_assignment/gowebapp/code/config

ls ~/$student_name-notepad/Mod9_assignment/gowebapp

Result

Your gowebapp folder should look like the following:

code config Dockerfile

Step 2: Modify the app to support setting the DB password through an environment variable

Next, let’s make a minor modification to the Go application code to allow setting the DB password through an environment variable. This will make it easier to dynamically inject this value at deployment time.

Use a text editor of your choice (for example, edit or VS Code) to modify:

edit ~/$student_name-notepad/Mod9_assignment/gowebapp/code/vendor/app/shared/database/database.go

- Add an import for the "os" package at line 8. After making this change, your imports list will look like the following:

import (
	"encoding/json"
	"fmt"
	"log"
	"time"
	"os"

	"github.com/boltdb/bolt"
	_ "github.com/go-sql-driver/mysql" // MySQL driver
	"github.com/jmoiron/sqlx"
	"gopkg.in/mgo.v2"
)

- Add the following code at line 89, after var err error:

// Check for MySQL Password environment variable and update configuration if present
if os.Getenv("DB_PASSWORD") != "" {
	d.MySQL.Password = os.Getenv("DB_PASSWORD")
}

2.2 Build new Docker image for your frontend application

Step 1 Set the project ID in an environment variable:

export PROJECT_ID=<project_id>

Step 2: Update the Dockerfile for your gowebapp frontend application to define a default DB_PASSWORD environment variable and to add a volume declaration for the container configuration path:

cd ~/$student_name-notepad/Mod9_assignment/gowebapp

edit Dockerfile

FROM golang:1.16.4
LABEL maintainer="student@mcgill.ca"
LABEL gowebapp="v1"
EXPOSE 80
ENV GO111MODULE=auto
ENV GOPATH=/go
# Default DB password read by database.go; can be overridden at run time
ENV DB_PASSWORD=rootpasswd
COPY /code $GOPATH/src/gowebapp/
WORKDIR $GOPATH/src/gowebapp/
RUN go get && go install
# Mount point for the externalized config.json
VOLUME $GOPATH/src/gowebapp/config
ENTRYPOINT $GOPATH/bin/gowebapp

Step 3: Build the updated gowebapp Docker image locally

cd ~/$student_name-notepad/Mod9_assignment/gowebapp

Build and push the gowebapp image to GCR. Make sure to include the "." at the end of the build command.

docker build -t gcr.io/${PROJECT_ID}/gowebapp:v3 .

docker push gcr.io/${PROJECT_ID}/gowebapp:v3

2.3 Run and test new Docker image locally

Before deploying to Kubernetes, let’s test the updated gowebapp Docker image locally, to ensure that the frontend and backend containers run and integrate properly.

Step 1: Launch frontend and backend containers

First, we launch the backend database container using a previously created Docker image, because it takes a bit longer to start up and the frontend container depends on it.

Note

Make sure the PROJECT_ID environment variable is set; the images in the commands below are pulled from gcr.io/${PROJECT_ID}.

docker network create gowebapp -d bridge

docker run --net gowebapp --name gowebapp-mysql --hostname gowebapp-mysql \
  -d -e MYSQL_ROOT_PASSWORD=rootpasswd gcr.io/${PROJECT_ID}/gowebapp-mysql:v1

Step 2: Now launch a frontend container using the updated gowebapp image, mapping container port 80 (where the web application is exposed) to port 8080 on the host machine. Notice how we're mapping a host volume into the container for configuration, and setting a container environment variable with the MySQL DB password:


docker run -p 8080:80 \
  -v ~/$student_name-notepad/Mod9_assignment/gowebapp/config:/go/src/gowebapp/config \
  -e DB_PASSWORD=rootpasswd \
  --net gowebapp -d --name gowebapp \
  --hostname gowebapp gcr.io/${PROJECT_ID}/gowebapp:v3

Step 3 Test the application locally

Now that we've launched the application containers, let's try to test the web application locally.

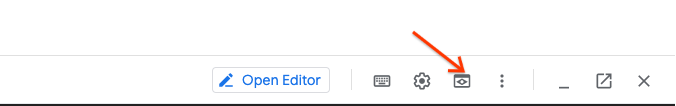

You should be able to access the application at Google Cloud Web Preview Console:

Note

Web Preview uses port 8080 by default. If your application uses another port, you can change it as needed.

Step 4 Create an account and log in. Write something in your Notepad and save it. This verifies that the application is working and properly integrates with the backend database container.

Result

By externalizing the application configuration, you have made it easier to manage and modify at deployment time. This will be very helpful as we deploy our applications to Kubernetes.

Step 5 Cleanup environment

docker rm -f $(docker ps -q)

docker network rm gowebapp

3.1 Create a Secret

Step 1 Base64-encode the MySQL password rootpasswd. See Lab 8 for more details.

echo -n "rootpasswd" | base64

Result

The MySQL password has been Base64-encoded: the command prints cm9vdHBhc3N3ZA==.

Step 2 Edit a secret for the MySQL password

cd ~/$student_name-notepad/Mod9_assignment/deploy

edit secret-mysql.yaml

kind: Secret
apiVersion: v1
# TODO1 Create secret name: mysql
# TODO2 Secret should use the arbitrary user-defined type (Opaque): https://kubernetes.io/docs/concepts/configuration/secret/#secret-types
# TODO3 Define the MySQL password in base64-encoded format
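A completed secret-mysql.yaml might look like the sketch below. It assumes the Opaque type and a data key named password (the key the Deployment TODOs reference), and uses the Base64 string printed in Step 1; treat it as one possible solution rather than the required one.

```yaml
kind: Secret
apiVersion: v1
metadata:
  name: mysql              # referenced as secretKeyRef.name in the Deployments
type: Opaque               # arbitrary user-defined data
data:
  # Base64 of "rootpasswd", from: echo -n "rootpasswd" | base64
  password: cm9vdHBhc3N3ZA==
```

Values under data must be Base64-encoded; the stringData field accepts plain text instead and is converted into data when the Secret is written. Base64 is an encoding, not encryption.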

kubectl apply -f secret-mysql.yaml

kubectl describe secret mysql

Step 3 Update gowebapp-mysql-deployment.yaml under ~/$student_name-notepad/Mod9_assignment/deploy:

edit ~/$student_name-notepad/Mod9_assignment/deploy/gowebapp-mysql-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gowebapp-mysql
  labels:
    run: gowebapp-mysql
    tier: backend
spec:
  replicas: 1
  selector:
    matchLabels:
      run: gowebapp-mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        run: gowebapp-mysql
    spec:
      containers:
      - env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            #TODO: replace value: rootpasswd with secretKeyRef
            #TODO: name is mysql
            #TODO: key is password
        # Replace ${PROJECT_ID} with your project ID: kubectl does not expand shell variables
        image: gcr.io/${PROJECT_ID}/gowebapp-mysql:v1
        name: gowebapp-mysql
        ports:
        - containerPort: 3306
        #TODO add a livenessProbe which performs a tcpSocket probe
        #against port 3306 with an initial
        #delay of 30 seconds, and a timeout of 2 seconds
        #TODO add a readinessProbe for tcpSocket port 3306 with a 25 second
        #initial delay, and a timeout of 2 seconds
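One possible way to complete the container TODOs is sketched below. It assumes the mysql Secret with a password key from Step 2 and uses the probe timings given in the TODO comments; only the container list under spec.template.spec is shown, and the rest of the manifest stays as above.

```yaml
# Fragment: goes under spec.template.spec of gowebapp-mysql-deployment.yaml
containers:
- name: gowebapp-mysql
  image: gcr.io/${PROJECT_ID}/gowebapp-mysql:v1   # substitute your project ID
  env:
  - name: MYSQL_ROOT_PASSWORD
    valueFrom:
      secretKeyRef:
        name: mysql        # the Secret created above
        key: password      # key inside that Secret
  ports:
  - containerPort: 3306
  livenessProbe:           # restart the container if MySQL stops accepting TCP connections
    tcpSocket:
      port: 3306
    initialDelaySeconds: 30
    timeoutSeconds: 2
  readinessProbe:          # only route Service traffic once port 3306 accepts connections
    tcpSocket:
      port: 3306
    initialDelaySeconds: 25
    timeoutSeconds: 2
```

A tcpSocket probe only checks that the port accepts a connection, which suits MySQL because it exposes no HTTP endpoint.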

Step 4 Start the rolling upgrade and record the command used in the rollout history:

kubectl apply -f gowebapp-mysql-deployment.yaml --record

Step 5 Verify that the rollout was successful:

kubectl rollout status deploy gowebapp-mysql

Step 6 Check that the pods are running:

kubectl get pods

Step 7 Create a Service object for MySQL:

kubectl apply -f gowebapp-mysql-service.yaml --record

Step 8 Check that the Service was created:

kubectl get service -l "run=gowebapp-mysql"

3.2 Create ConfigMap and Probes for gowebapp

Step 1: Create ConfigMap for gowebapp's config.json file

cd ~/$student_name-notepad/Mod9_assignment/gowebapp/config/

kubectl create configmap gowebapp --from-file=webapp-config-json=config.json

kubectl describe configmap gowebapp

Note

The entire contents of config.json are stored under the key webapp-config-json.
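To make the note concrete, the object created by the kubectl create configmap command above has roughly the shape below. The file contents are shown as a placeholder, not real data.

```yaml
# Approximate shape of the object created by:
#   kubectl create configmap gowebapp --from-file=webapp-config-json=config.json
apiVersion: v1
kind: ConfigMap
metadata:
  name: gowebapp
data:
  # The whole file is stored as one string value under this key
  webapp-config-json: |
    <entire contents of config.json>
```

You can compare this with the real object by running kubectl get configmap gowebapp -o yaml.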

3.3 Deploy gowebapp by Referencing the Secret and ConfigMap and Defining Probes

Step 1: Update gowebapp-deployment.yaml under ~/$student_name-notepad/Mod9_assignment/deploy/

cd ~/$student_name-notepad/Mod9_assignment/deploy/

edit gowebapp-deployment.yaml

In this exercise, we will add liveness/readiness probes to our deployments. For more information, see here: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-probes/

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gowebapp
  labels:
    run: gowebapp
spec:
  replicas: 2
  selector:
    matchLabels:
      run: gowebapp
  template:
    metadata:
      labels:
        run: gowebapp
    spec:
      containers:
      - env:
        - name: DB_PASSWORD
          #TODO: replace value: `rootpasswd` with valueFrom:
            secretKeyRef:
              #TODO: name is mysql
              #TODO: key is password
        # Replace ${PROJECT_ID} with your project ID: kubectl does not expand shell variables
        image: gcr.io/${PROJECT_ID}/gowebapp:v3
        name: gowebapp
        ports:
        - containerPort: 80
        livenessProbe:
          #TODO add a livenessProbe which performs httpGet
          #against the /register endpoint on port 80 with an initial
          #delay of 15 seconds, and a timeout of 5 seconds
        #TODO add a readinessProbe which performs httpGet
        #against the /register endpoint on port 80 with an initial
        #delay of 25 seconds, and a timeout of 5 seconds
        volumeMounts:
        - #TODO: give the volume a name: config-volume
          #TODO: specify the mountPath: /go/src/gowebapp/config
      volumes:
      - #TODO: define volume name: config-volume
        configMap:
          #TODO: identify your ConfigMap name: gowebapp
          items:
          - key: webapp-config-json
            path: config.json
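A sketch of the pod spec with the TODOs completed follows. It assumes the same mysql Secret and password key, the /register endpoint and timings from the comments, and the volume name config-volume; only spec.template.spec is shown, and it is one possible solution rather than the only valid one.

```yaml
# Fragment: spec.template.spec of gowebapp-deployment.yaml
containers:
- name: gowebapp
  image: gcr.io/${PROJECT_ID}/gowebapp:v3   # substitute your project ID
  env:
  - name: DB_PASSWORD          # read by the os.Getenv call added to database.go
    valueFrom:
      secretKeyRef:
        name: mysql
        key: password
  ports:
  - containerPort: 80
  livenessProbe:               # restart the container if /register stops responding
    httpGet:
      path: /register
      port: 80
    initialDelaySeconds: 15
    timeoutSeconds: 5
  readinessProbe:              # keep the Pod out of the Service until /register responds
    httpGet:
      path: /register
      port: 80
    initialDelaySeconds: 25
    timeoutSeconds: 5
  volumeMounts:
  - name: config-volume
    mountPath: /go/src/gowebapp/config   # the VOLUME path declared in the Dockerfile
volumes:
- name: config-volume
  configMap:
    name: gowebapp
    items:
    - key: webapp-config-json  # key created by --from-file=webapp-config-json=...
      path: config.json        # file name that appears inside mountPath
```

Because the ConfigMap item maps webapp-config-json to config.json, the application finds its configuration at /go/src/gowebapp/config/config.json.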

Step 2: Apply the updated Deployment:

kubectl apply -f gowebapp-deployment.yaml --record

Result

This starts the rolling upgrade and records the command used in the rollout history.

Step 3: Verify that the rollout was successful

kubectl rollout status deploy gowebapp

Step 4: Get the rollout history

kubectl rollout history deploy gowebapp

Step 5: Get the rollout history details for a specific revision (use a revision number shown in the output of the previous command)

kubectl rollout history deploy gowebapp --revision=<latest_version_number>

Step 6 Check if pods are running

kubectl get pods

Step 7 Create a Service object for gowebapp

kubectl apply -f gowebapp-service.yaml --record

Step 8 Access your application on the public IP of the LoadBalancer that was automatically created for the gowebapp service.

To get the LoadBalancer's external IP, run the following command:

kubectl get svc

Expected output:

NAME             TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
gowebapp         LoadBalancer   10.107.15.39   XXXXXX        9000:32634/TCP   30m
gowebapp-mysql   ClusterIP      None           <none>        3306/TCP         1h

Step 9 Access the LoadBalancer's EXTERNAL-IP from your browser, using the service port shown in the PORT(S) column.

Result

Congratulations! You've deployed your app to Kubernetes with Secrets, ConfigMaps, and Probes.

4.1 Configure VPA for gowebapp-mysql to find optimal Resource Request and Limits values

Requests and limits are how Kubernetes assigns a Quality of Service (QoS) class to Pods, and they also enable features such as HPA, the Cluster Autoscaler (CA), resource quotas, and more.

However, choosing good values for resource requests and limits is hard, and VPA can help. Configure VPA for gowebapp-mysql and observe its recommendations for requests and limits.
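For context on QoS classes: a Pod is BestEffort when none of its containers set requests or limits, Guaranteed when every container sets CPU and memory requests equal to its limits, and Burstable otherwise. The fragment below uses hypothetical values (not VPA output) to show a container that would qualify for Guaranteed; using the VPA Lower Bound as requests and Upper Bound as limits, as this lab does, yields Burstable instead.

```yaml
# Illustrative container resources; the values are hypothetical.
# requests == limits for both cpu and memory => Guaranteed QoS
# (provided every container in the Pod is configured this way).
resources:
  requests:
    cpu: 250m
    memory: 256Mi
  limits:
    cpu: 250m
    memory: 256Mi
```

To check the class Kubernetes assigned, you can run kubectl get pod <pod-name> -o jsonpath='{.status.qosClass}'.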

Step 1 Check our gowebapp-mysql app:

kubectl get deploy

Result

Our Deployment is up; however, without requests and limits its Pods are assigned the BestEffort QoS class on the cluster.

Step 2 Edit a manifest for gowebapp-mysql Vertical Pod Autoscaler resource:

cd ~/$student_name-notepad/Mod9_assignment/deploy

edit gowebapp-mysql-vpa.yaml

apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: gowebapp-mysql
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: gowebapp-mysql
  updatePolicy:
    updateMode: "Off"   # only compute recommendations; do not evict or resize Pods

Step 3 Apply the manifest for gowebapp-mysql-vpa

kubectl apply -f gowebapp-mysql-vpa.yaml

Step 4 Wait a minute, and then view the VerticalPodAutoscaler

kubectl describe vpa gowebapp-mysql

Note

If you don't see it, wait a little longer and try the previous command again.

Step 5 Locate the "Container Recommendations" at the end of the output from the describe command.

Result

We will use the Lower Bound values as our requests and the Upper Bound values as our limits.

4.2 Set Recommended Request and Limits values to gowebapp-mysql

Step 1 Edit the manifest for the gowebapp-mysql Deployment resource:

cd ~/$student_name-notepad/Mod9_assignment/deploy

edit gowebapp-mysql-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gowebapp-mysql
...
        #resources:
          #TODO define a resource request and limits based on VPA Recommender
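The sketch below shows where a resources block sits in the gowebapp-mysql container. The numbers are hypothetical placeholders, not real recommendations: substitute the Lower Bound values from kubectl describe vpa gowebapp-mysql as requests and the Upper Bound values as limits, and keep the container's existing env, ports, and probe entries.

```yaml
# Fragment under spec.template.spec of gowebapp-mysql-deployment.yaml.
# HYPOTHETICAL values: replace them with your own VPA recommendations.
containers:
- name: gowebapp-mysql
  image: gcr.io/${PROJECT_ID}/gowebapp-mysql:v1
  # ...existing env, ports, livenessProbe and readinessProbe entries...
  resources:
    requests:            # VPA "Lower Bound"
      cpu: 25m
      memory: 256Mi
    limits:              # VPA "Upper Bound"
      cpu: 500m
      memory: 1Gi
```

Since the requests here are lower than the limits, the resulting Pods fall into the Burstable QoS class.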

Step 2 Redeploy our application with defined resource request and limits

cd ~/$student_name-notepad/Mod9_assignment/deploy/

kubectl delete -f gowebapp-mysql-deployment.yaml

kubectl apply -f gowebapp-mysql-deployment.yaml

4.3 Configure VPA for gowebapp to find optimal Resource Request and Limits values

Step 1: Make sure the gowebapp ConfigMap for config.json exists (it was created in section 3.2):

kubectl describe configmap gowebapp

Step 2 Deploy gowebapp app under ~/$student_name-notepad/Mod9_assignment/deploy/

cd ~/$student_name-notepad/Mod9_assignment/deploy/

kubectl apply -f gowebapp-service.yaml #Create Service

kubectl apply -f gowebapp-deployment.yaml #Create Deployment

kubectl get deploy

Result

Our Deployment is up; however, without requests and limits its Pods are assigned the BestEffort QoS class on the cluster.

Step 3 Edit a manifest for gowebapp Vertical Pod Autoscaler resource:

cd ~/$student_name-notepad/Mod9_assignment/deploy

edit gowebapp-vpa.yaml

apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: gowebapp
#TODO: Ref: https://cloud.google.com/kubernetes-engine/docs/how-to/vertical-pod-autoscaling
#TODO: Configure VPA with updateMode: "Off"

Step 4 Apply the manifest for gowebapp-vpa

kubectl apply -f gowebapp-vpa.yaml

Step 5 Wait a minute, and then view the VerticalPodAutoscaler

kubectl describe vpa gowebapp

Note

If you don't see it, wait a little longer and try the previous command again.

Step 6 Locate the "Container Recommendations" at the end of the output from the describe command.

Result

We will use the Lower Bound values as our requests and the Upper Bound values as our limits.

4.4 Set Recommended Request and Limits values to gowebapp

Step 1 Edit a manifest for gowebapp deployment resource:

cd ~/$student_name-notepad/Mod9_assignment/deploy

edit gowebapp-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gowebapp
  labels:
    run: gowebapp
spec:
...
        resources:
          #TODO define a resource request and limits based on VPA Recommender

Step 2 Redeploy our application with defined resource request and limits

cd ~/$student_name-notepad/Mod9_assignment/deploy/

kubectl delete -f gowebapp-deployment.yaml

kubectl apply -f gowebapp-deployment.yaml

4.5 Configure HPA for gowebapp

Our Notepad application is going to production soon. To make sure it can scale with load, we will configure a Horizontal Pod Autoscaler (HPA) for the gowebapp Deployment.

Step 1 Create an HPA for gowebapp based on CPU, with minReplicas 1, maxReplicas 5, and a target average CPU utilization of 50%.

cd ~/$student_name-notepad/Mod9_assignment/deploy

cat gowebapp-hpa.yaml

Ref: https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: gowebapp-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: gowebapp
  minReplicas: 1
  maxReplicas: 5
  targetCPUUtilizationPercentage: 50
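To get a feel for what targetCPUUtilizationPercentage: 50 means, the core scaling rule described in the Kubernetes HPA documentation is shown below (ignoring the controller's tolerance and stabilization behavior). Utilization is measured relative to each Pod's CPU request, which is one reason the gowebapp Deployment needs CPU requests (section 4.4) before the HPA can act on it.

```latex
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentCPUUtilization}}{\text{targetCPUUtilization}} \right\rceil
```

For example, 2 replicas averaging 80% CPU give ⌈2 × 80/50⌉ = ⌈3.2⌉ = 4 replicas; at 150% the rule gives 6, which the HPA caps at maxReplicas: 5.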

Step 2 Apply the manifest for gowebapp-hpa

kubectl apply -f gowebapp-hpa.yaml

Step 3 Take a closer look at the HPA and observe any scaling up or down.

kubectl describe hpa gowebapp-hpa

Note

It takes some time to collect metrics about current CPU usage, and since our application doesn't have real load, it might not trigger any scaling.

5.1 Commit K8s manifests to the repository and share them with the Instructor/Teacher

Step 1 Commit the deploy folder using the following Git commands:

git add .

git commit -m "k8s manifests for Hands-on Assignment 4"

Step 2 Push commit to the Cloud Source Repositories:

git push origin master

5.2 Cleaning Up

Step 1 Delete the cluster

gcloud container clusters delete k8s-features --zone us-central1-c